Hybrid Deep Learning approach for Lane Detection

Zarogiannis Dimitrios

LinkedIn Länk till annan webbplats, öppnas i nytt fönster.

Stelio Bompai

LinkedIn Länk till annan webbplats, öppnas i nytt fönster.

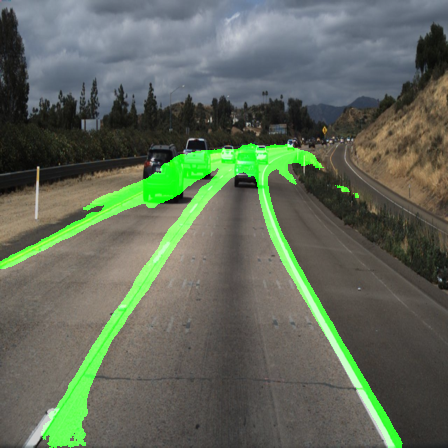

Lane detection with Deep Learning (DL) has been at the forefront of research for the autonomous driving industry. In this thesis, an innovative combination of DL models and a custom post-processing mechanism are introduced and compared to the currect state-of-the-art.

Lane detection is a crucial task in the field of autonomous driving and advanced driver assistance systems. In recent years, convolutional neural networks (CNNs) have been the primary approach for solving this problem. However, interesting findings from recent research works regarding the use of Transformer models and attention-based mechanisms have shown to be beneficial in the task of semantic segmentation of the road lane markings. In this work, we investigate the effectiveness of incorporating a Vision Transformer (ViT) to process feature maps extracted by a Convolutional Neural Network (CNN) for lane detection. We compare the performance of a baseline CNN-based lane detection model with that of a hybrid CNN-ViT pipeline and test the model over a well known dataset. Furthermore, we explore the impact of incorporating temporal information from a road scene on a lane detection model's predictive performance. We propose a post-processing technique that utilizes information from previous frames to improve the accuracy of the lane detection model. Our results show that incorporating temporal information noticeably improves the model's performance, and manages to make effective corrections over the originally predicted lane masks. Our CNN backbone, exploiting the proposed post-processing mechanism, manages to reach a competitive performance with noticeable improvements due our proposed post-processing module. However, the findings from the testing of our CNN-ViT pipeline and a relevant ablation study, do indicate that this hybrid approach might not be a good fit for lane detection.